Post

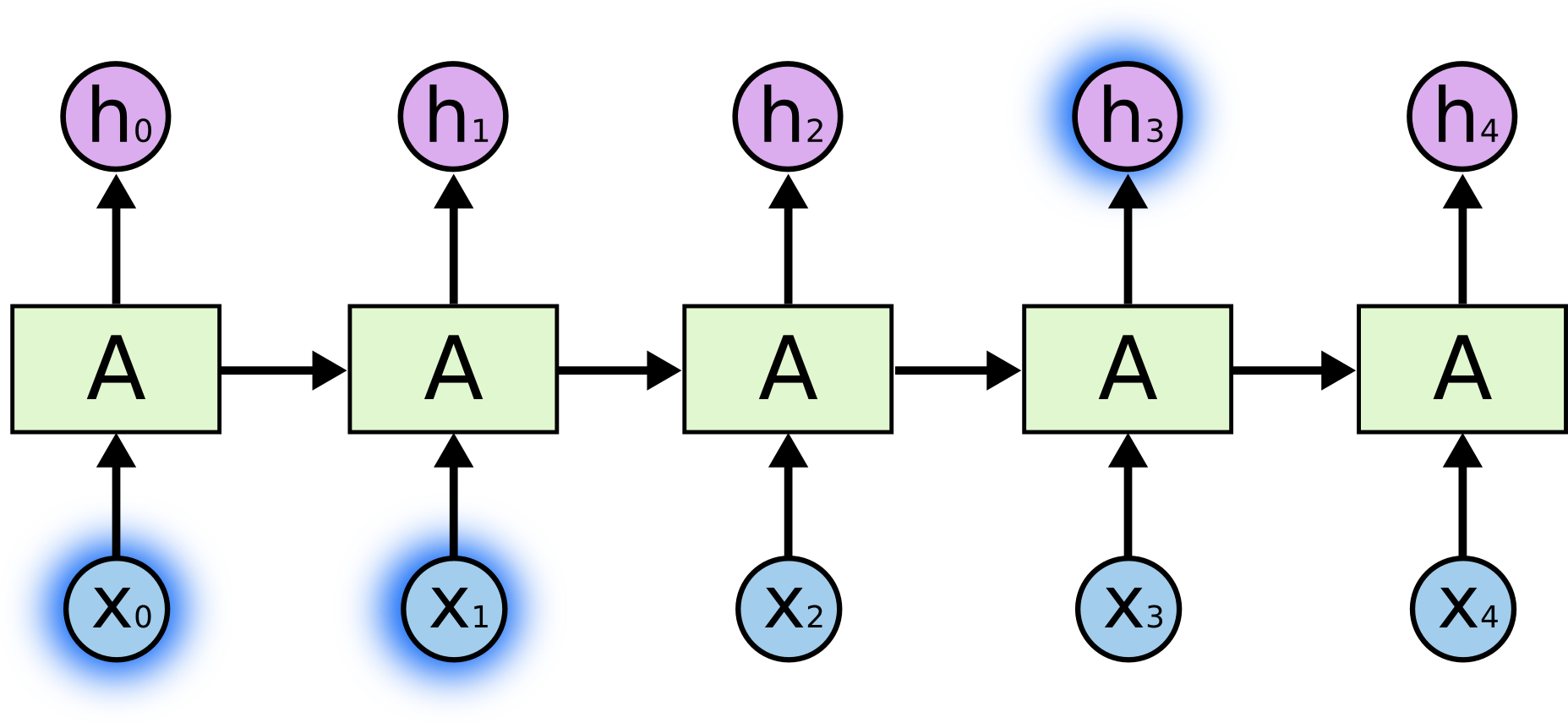

Originally shared by Google AI

In the last few years, there has been incredible success applying Recurrent Neural Networks (RNNs) to a variety of problems, such as speech recognition, language modeling, translation, image captioning and more.

Essential to these successes is a special kind of recurrent neural network architecture called Long Short-Term Memory (LSTM), which can capture long-term dependencies and relationships in sequential data and has produced remarkable results.

But what are LSTMs, and how do they work? In the blog post linked below, Google intern Christopher Olah gives an overview of LSTMs, explaining how they work and why they work so well.
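To give a rough feel for how an LSTM "remembers", here is a minimal, hypothetical sketch of a single scalar LSTM cell in Python, assuming the standard formulation with forget, input and output gates and a cell state. The weights are hand-picked for illustration rather than learned, and the helper names (`lstm_step`, `make_weights`, `memory_after`) are made up for this example.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, w):
    """One step of a scalar LSTM cell (standard forget/input/output gates)."""
    f = sigmoid(w["wf_h"] * h_prev + w["wf_x"] * x + w["bf"])    # forget gate
    i = sigmoid(w["wi_h"] * h_prev + w["wi_x"] * x + w["bi"])    # input gate
    g = math.tanh(w["wc_h"] * h_prev + w["wc_x"] * x + w["bc"])  # candidate value
    o = sigmoid(w["wo_h"] * h_prev + w["wo_x"] * x + w["bo"])    # output gate
    c = f * c_prev + i * g   # cell state: keep part of the old memory, add new input
    h = o * math.tanh(c)     # hidden state passed to the next step
    return h, c

def make_weights(forget_bias):
    # Hypothetical hand-picked weights: only the input path and the forget bias matter here.
    return {
        "wf_h": 0.0, "wf_x": 0.0, "bf": forget_bias,
        "wi_h": 0.0, "wi_x": 4.0, "bi": -2.0,
        "wc_h": 0.0, "wc_x": 2.0, "bc": 0.0,
        "wo_h": 0.0, "wo_x": 0.0, "bo": 0.0,
    }

def memory_after(forget_bias, steps=50):
    """Feed a single 1.0 followed by zeros; return (cell after first step, cell at the end)."""
    w = make_weights(forget_bias)
    h, c = lstm_step(1.0, 0.0, 0.0, w)
    c_first = c
    for _ in range(steps - 1):
        h, c = lstm_step(0.0, h, c, w)
    return c_first, c
```

With `memory_after(5.0)` the forget gate stays close to 1, so most of the stored signal survives 50 steps; with `memory_after(-5.0)` it is wiped out almost immediately. That additive, gated update of the cell state is what lets LSTMs carry information across long gaps.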

Understanding LSTM Networks -- colah's blog

Shared with: Public

Reshared by: William Rutiser

This post was originally on Google+